New Method Boosts Computer Application Speed by 9%

Researchers from North Carolina State University and Samsung Electronics have found a new way to boost the speed of computer applications by more than 9%. The improvement comes from techniques that allow computer processors to retrieve data more efficiently.

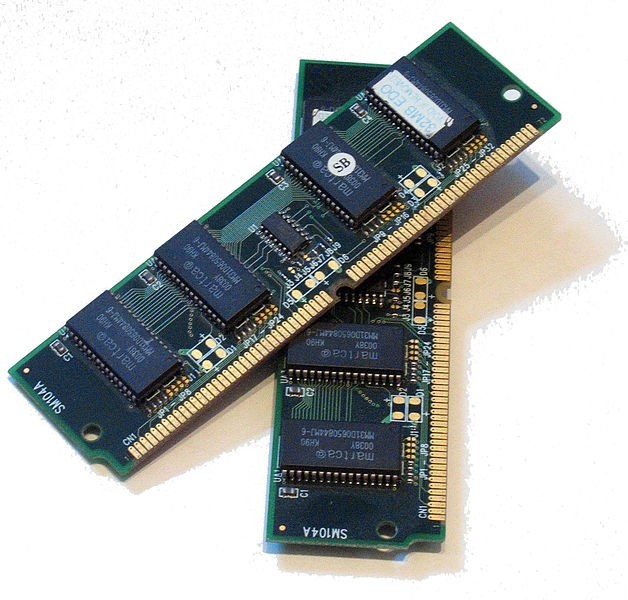

To perform operations, a computer processor must retrieve data from memory, which is stored in off-chip "main" memory. The most frequently used data is also stored temporarily in a die-stacked dynamic random access memory (DRAM) cache, which is placed closer to the processor and can be accessed much faster.

The data in the cache is organized in large blocks, or macro-blocks, so that the processor knows where to find whatever data it needs. But for any given operation, the processor may not need all of the data in a macro-block, and retrieving the unneeded data is time-consuming.

To make the process more efficient, the researchers developed a technique in which the cache learns over time which data the processor will need from each macro-block. This allows the cache to do two things. First, it can compress the macro-block, retrieving only the relevant data, which allows the cache to send data to the processor more efficiently.
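The learning step can be pictured with a small Python sketch. This is not the researchers' implementation: the `FootprintPredictor` class, its method names, and the figure of 32 blocks per macro-block are all assumptions made for illustration. The idea shown is simply that the blocks touched during one stay in the cache become the prediction for the next fetch.

```python
from collections import defaultdict

# Assumed for illustration: each macro-block is split into 32 smaller blocks.
BLOCKS_PER_MACRO_BLOCK = 32


class FootprintPredictor:
    """Hypothetical helper that remembers which blocks of a macro-block were used."""

    def __init__(self):
        # macro-block id -> block offsets used during its previous stay in the cache
        self.history = {}
        # macro-block id -> block offsets touched during the current stay
        self.current = defaultdict(set)

    def record_access(self, macro_block, offset):
        self.current[macro_block].add(offset)

    def evict(self, macro_block):
        # On eviction, the observed footprint becomes the prediction for next time.
        self.history[macro_block] = self.current.pop(macro_block, set())

    def blocks_to_fetch(self, macro_block):
        # With no history yet, fall back to fetching the whole macro-block.
        return sorted(self.history.get(macro_block, range(BLOCKS_PER_MACRO_BLOCK)))


predictor = FootprintPredictor()
for offset in (0, 1, 7):
    predictor.record_access(macro_block=42, offset=offset)
predictor.evict(42)

print(predictor.blocks_to_fetch(42))       # [0, 1, 7]
print(len(predictor.blocks_to_fetch(99)))  # 32
```

In this sketch, macro-block 42 is fetched as three blocks the second time around, while a macro-block that has never been seen is still fetched in full.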

Dense Footprint Cache

Second, because the macro-blocks are compressed, space is freed up in the cache that can be used to store other data the processor is likely to need. The researchers tested this approach, called Dense Footprint Cache, in a processor and memory simulator.

After running 3 billion instructions for each application tested in the simulator, the researchers found that Dense Footprint Cache sped up applications by around 9.5% compared with state-of-the-art competing methods for managing die-stacked DRAM.
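A rough sketch of the capacity effect, in Python, is given here. The function name, the cache capacity, and the per-macro-block footprints are made-up values chosen only to show the arithmetic; they are not taken from the paper. The sketch compares reserving a full slot for every macro-block with storing only the blocks that are expected to be used.

```python
def macro_blocks_that_fit(capacity_blocks, footprints, blocks_per_macro_block, dense):
    """Count how many macro-blocks fit, in arrival order, before the cache is full."""
    used = 0
    count = 0
    for footprint in footprints:
        # Dense storage keeps only the useful blocks; otherwise a full slot is reserved.
        size = footprint if dense else blocks_per_macro_block
        if used + size > capacity_blocks:
            break
        used += size
        count += 1
    return count


# Made-up numbers of useful blocks in eight consecutive macro-blocks.
footprints = [4, 8, 2, 16, 6, 10, 3, 5]

conventional = macro_blocks_that_fit(64, footprints, 32, dense=False)
dense = macro_blocks_that_fit(64, footprints, 32, dense=True)
print(conventional, dense)  # 2 8
```

With these assumed numbers, the same 64-block cache holds two macro-blocks when each one reserves a full slot and all eight when only the useful blocks are kept.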

Die-stacked DRAM technology enables a large last-level cache (LLC) that offers high-bandwidth data access to the processor but requires a large tag array. This tag array can take up a significant portion of the on-chip SRAM budget.

To reduce the SRAM overhead, systems such as Intel Haswell rely on a large block (Mblock) size. One drawback of a large Mblock size is that many bytes of an Mblock may not be needed by the processor but are still fetched into the cache.
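The trade-off between block size and tag array size comes down to simple arithmetic, sketched below in Python. The 1 GiB cache size, the 64-byte and 2 KiB block sizes, and the 4 bytes per tag entry are assumptions picked for round numbers, not figures from the article or from any specific processor.

```python
GIB = 1 << 30
MIB = 1 << 20


def tag_array_bytes(cache_bytes, block_bytes, tag_entry_bytes):
    """One tag entry is needed per block, so fewer, larger blocks mean fewer tags."""
    return (cache_bytes // block_bytes) * tag_entry_bytes


# Assumed sizes: a 1 GiB cache with 4-byte tag entries.
small_blocks = tag_array_bytes(1 * GIB, 64, 4)      # 64-byte blocks
large_blocks = tag_array_bytes(1 * GIB, 2048, 4)    # 2 KiB Mblocks

print(small_blocks // MIB, large_blocks // MIB)  # 64 2
```

Under these assumptions the tag array shrinks from 64 MiB to 2 MiB when the block size grows from 64 bytes to 2 KiB, which is why large Mblocks are attractive despite the unneeded bytes they bring in.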

Last Level Cache Miss Ratios

Dense Footprint Cache also used 4.3% less energy. The researchers also found that Dense Footprint Cache significantly improved "last-level cache miss ratios". A last-level cache miss occurs when the processor tries to retrieve data from the cache but the data is not there, forcing the processor to retrieve it from off-chip main memory.
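A miss ratio is the number of misses divided by the total number of accesses. The short Python sketch below computes it for a made-up access trace; the function name, the trace, and the cache contents are hypothetical, and the cache contents are held fixed, which is a deliberate simplification (a real cache replaces data as it runs).

```python
def miss_ratio(accesses, cached):
    """Fraction of accesses whose address is not present in the cache."""
    misses = sum(1 for address in accesses if address not in cached)
    return misses / len(accesses)


# Made-up trace of addresses requested by the processor.
accesses = [1, 2, 3, 1, 4, 2, 5, 1]

smaller_cache = miss_ratio(accesses, cached={1, 2, 3})
larger_cache = miss_ratio(accesses, cached={1, 2, 3, 4})
print(smaller_cache, larger_cache)  # 0.25 0.125
```

In this toy trace, holding one more useful address in the cache halves the miss ratio, which mirrors how freeing space for more useful data can reduce trips to main memory.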

These cache misses make operations far less efficient. Dense Footprint Cache reduced last-level cache miss ratios by about 43%. The work is described in a paper, "Dense Footprint Cache: Capacity-Efficient Die-Stacked DRAM Last Level Cache", which was presented at the International Symposium on Memory Systems in Washington, D.C., on October 3-6.

The lead author of the paper is Seunghee Shin, a Ph.D. student at NC State. The paper was co-authored by Yan Solihin, a professor of electrical and computer engineering at NC State, and Sihong Kim of Samsung Electronics.