New Computer Chip Enhances Data Centre Performance

A new computer chip designed by Princeton University researchers promises to boost the performance of data centres, which lie at the core of online services from email to social media. Data centres are essentially giant warehouses packed with computer servers that enable cloud-based services such as Gmail and Facebook and store the enormous amount of content available through the internet.

The computer chips at the heart of the largest servers, which route and process information, often differ little from the chips in smaller servers or everyday personal computers. By designing their chip specifically for massive computing systems, the Princeton researchers say they can substantially increase processing speed while lowering energy needs.

The chip architecture is scalable: designs can be built that range from a dozen processing units, known as cores, to several thousand. The architecture also allows thousands of chips to be linked together into a single system comprising millions of cores. The chip is called Piton, after the metal spikes that rock climbers drive into mountainsides to aid their ascent.

Showing How Servers Could Route Efficiently and Cheaply

David Wentzlaff, an assistant professor of electrical engineering and associated faculty in the Department of Computer Science at Princeton University, said: ‘With Piton, we really sat down and rethought computer architecture in order to build a chip specifically for data centres and the cloud.

‘The chip we’ve made is among the largest chips ever built in academia, and it displays how servers could route far more efficiently and cheaply.’ Wentzlaff’s graduate student, Michael McKeown, gave a presentation on the Piton project at Hot Chips, a symposium on high-performance chips in Cupertino, California.

The unveiling of the chip is the culmination of years of effort by Wentzlaff and his students. Mohammad Shahrad, a graduate student in Wentzlaff’s Princeton Parallel Group, said that creating a physical piece of hardware in an academic setting is a rare and very special opportunity for computer architects.

The other Princeton researchers who have been involved in the project since its start in 2013 are Yaosheng Fu, Tri Nguyen, Yanqi Zhou, Jonathan Balkind, Alexey Lavrov, Matthew Matl, Xiaohua Liang and Samuel Payne, who is now at NVIDIA.

Manufactured for Research Team by IBM

The Piton chip, designed by the Princeton team, was manufactured for the research team by IBM. Primary funding for the project came from the National Science Foundation, the Defense Advanced Research Projects Agency and the Air Force Office of Scientific Research.

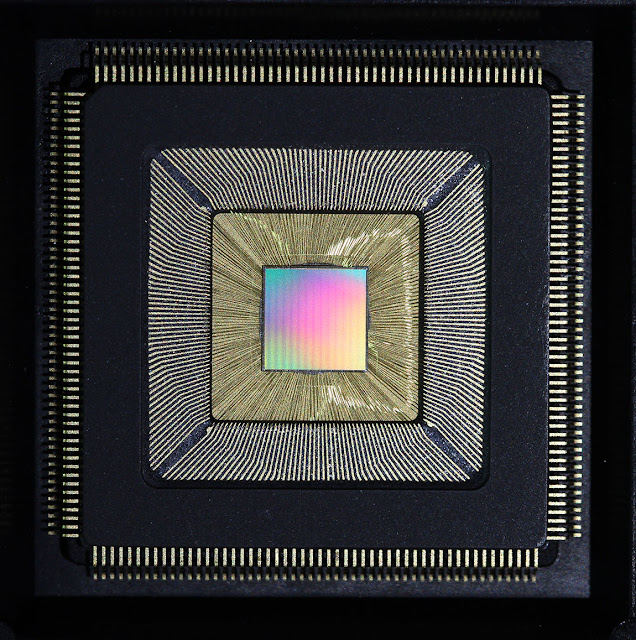

The current version of the Piton chip measures six by six millimetres and has more than 460 million transistors. Each of them is as small as 32 nanometres, too small to be seen by anything but an electron microscope.

The bulk of these transistors are contained in 25 cores, the independent processors that carry out the instructions in a computer program. Most personal computer chips have four or eight cores. In general, more cores mean faster processing, so long as software can exploit the hardware’s available cores to run operations in parallel. Computer manufacturers have therefore turned to multi-core chips to squeeze further gains out of conventional approaches to computer hardware.
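The caveat about software can be made concrete with Amdahl's law, a standard textbook model that the researchers are not quoted as using. The sketch below assumes a hypothetical `parallel_fraction`, the share of a program's work that can be spread across cores; the values are illustrative and are not measurements of Piton.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup on `cores` cores when only `parallel_fraction`
    of the work can run in parallel (Amdahl's law)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    # Core counts taken from the article: 4 and 8 (personal computers), 25 (Piton).
    # The parallel fractions are assumed values chosen for illustration.
    for cores in (4, 8, 25):
        for fraction in (0.5, 0.95):
            speedup = amdahl_speedup(fraction, cores)
            print(f"{cores:>2} cores, {fraction:.0%} parallel: {speedup:.2f}x")
```

Under this model, a program that is only half parallel gains little from extra cores, which is why the benefit of a many-core chip depends on the software running on it.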

Prototype for Future Commercial Server System

In recent years, companies and academic institutions have produced chips with many dozens of cores, but Wentzlaff says that Piton’s readily scalable architecture can allow thousands of cores on a single chip, with half a billion cores in the data centre.

He said that what the team has with Piton is really a prototype for future commercial server systems that could take advantage of a tremendous number of cores to speed up processing. The design of the Piton chip focuses on exploiting commonality among programs running simultaneously on the same chip. One method for doing so is called execution drafting, which works much like drafting in bicycle racing, when cyclists conserve energy behind a lead rider who cuts through the air and creates a slipstream.

Multiple users at a data centre often run programs that rely on similar operations at the processor level. The Piton chip’s cores can recognise these instances and execute identical instructions consecutively so that they flow one after another, like a line of drafting cyclists. Doing so can increase energy efficiency by about 20% compared with a standard core, according to the researchers.
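The idea of issuing identical instructions back to back can be illustrated with a toy scheduler. This is a minimal sketch under strong assumptions, not Piton's hardware logic: the names `draft_schedule` and `drafted_fraction` are hypothetical, instructions are plain strings, and the two programs are assumed to stay in lockstep so that instructions are compared position by position.

```python
from typing import List, Tuple

# (program name, instruction, whether it drafted behind an identical instruction)
Issue = Tuple[str, str, bool]


def draft_schedule(leader: List[str], follower: List[str]) -> List[Issue]:
    """Interleave two instruction streams, marking each follower instruction
    that is identical to the leader instruction issued just before it."""
    schedule: List[Issue] = []
    for i in range(max(len(leader), len(follower))):
        if i < len(leader):
            schedule.append(("A", leader[i], False))
        if i < len(follower):
            drafted = i < len(leader) and follower[i] == leader[i]
            schedule.append(("B", follower[i], drafted))
    return schedule


def drafted_fraction(schedule: List[Issue]) -> float:
    """Share of all issued instructions that drafted behind an identical one."""
    if not schedule:
        return 0.0
    return sum(1 for _, _, drafted in schedule if drafted) / len(schedule)


if __name__ == "__main__":
    program_a = ["load", "add", "store", "branch"]
    program_b = ["load", "add", "mul", "branch"]
    plan = draft_schedule(program_a, program_b)
    for name, instruction, drafted in plan:
        print(name, instruction, "(drafted)" if drafted else "")
    print(f"drafted share: {drafted_fraction(plan):.3f}")
```

In this toy model, the more often the two programs perform the same operation at the same step, the larger the share of instructions that can follow in the leader's slipstream.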

Memory Traffic Shaper

A second innovation incorporated in the Piton chip parcels out when competing programs access computer memory that sits off the chip. Known as a memory traffic shaper, it operates like a traffic cop at a busy intersection, considering the needs of each program, adjusting memory requests and waving them through appropriately so that they do not clog the system.
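The traffic-cop behaviour can be sketched with a token bucket, a common rate-limiting technique used here purely for illustration; the article does not describe the mechanism inside Piton's shaper. The `Budget` and `TrafficShaper` names, the per-cycle credits and the program names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class Budget:
    """Hypothetical per-program allowance of off-chip memory requests."""
    refill_per_cycle: int  # credits added at the start of every cycle
    capacity: int          # maximum number of credits that can be banked
    credits: int = 0       # credits currently available


class TrafficShaper:
    """Toy shaper: a program may send one memory request per credit it holds."""

    def __init__(self, budgets: Dict[str, Budget]) -> None:
        self.budgets = budgets

    def tick(self, pending: Dict[str, int]) -> Dict[str, int]:
        """Advance one cycle and return how many of each program's
        pending requests are allowed through to memory."""
        granted: Dict[str, int] = {}
        for name, budget in self.budgets.items():
            budget.credits = min(budget.capacity,
                                 budget.credits + budget.refill_per_cycle)
            allowed = min(pending.get(name, 0), budget.credits)
            budget.credits -= allowed
            granted[name] = allowed
        return granted


if __name__ == "__main__":
    shaper = TrafficShaper({"web": Budget(2, 4), "batch": Budget(1, 2)})
    print(shaper.tick({"web": 5, "batch": 5}))  # both programs are throttled
    print(shaper.tick({"web": 0, "batch": 5}))  # idle "web" banks its credits
    print(shaper.tick({"web": 5, "batch": 0}))  # "web" spends the banked credits
```

Giving each program its own budget means a memory-hungry program cannot crowd out the others, while a program that has been idle can briefly send a burst.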

The memory traffic shaper can yield about an 18% jump in performance compared with conventional allocation. The Piton chip also gains efficiency through its management of memory stored on the chip itself, known as cache memory. This is the fastest memory in the computer and is used for frequently accessed information.

In many designs, cache memory is shared across all of the chip’s cores, but that approach can backfire when multiple cores access and modify the cache memory. Piton sidesteps this problem by assigning areas of the cache and specific cores to dedicated applications.

The researchers say the system can increase efficiency by 29% when applied to a 1,024-core architecture, and they estimate that the savings would grow as the system is deployed across millions of cores in a data centre. They also said that these improvements could be implemented while keeping costs in line with current manufacturing standards.
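The assignment of cache areas to dedicated applications can be illustrated with a small model. This is a sketch under stated assumptions rather than Piton's actual design: the cache is assumed to be divided into numbered slices, the `PartitionedCache` class and the application names are hypothetical, and addresses are mapped to slices with a simple modulo.

```python
from typing import Dict, List


class PartitionedCache:
    """Toy model: each application may only place data in its own cache slices."""

    def __init__(self, assignments: Dict[str, List[int]]) -> None:
        seen = set()
        for app, slices in assignments.items():
            if not slices:
                raise ValueError(f"{app} has no cache slices")
            overlap = seen.intersection(slices)
            if overlap:
                raise ValueError(f"slices {sorted(overlap)} are assigned twice")
            seen.update(slices)
        self.assignments = assignments

    def slice_for(self, app: str, address: int) -> int:
        """Choose a slice for the address from the application's own slices."""
        slices = self.assignments[app]
        return slices[address % len(slices)]


if __name__ == "__main__":
    cache = PartitionedCache({"mail": [0, 1], "video": [2, 3, 4]})
    for address in (7, 8, 9):
        print(address, cache.slice_for("mail", address),
              cache.slice_for("video", address))
```

Because no slice belongs to two applications, one application's reads and writes never land in the cache area reserved for another.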